SDSC6012 Course 1-Introduction

#sdsc6012

Time Series Definition

Core Concepts

- A time series is a sequence of data points indexed in chronological order.

- Application Areas:

- Economics: Daily stock prices, GDP, monthly unemployment rate

- Social Sciences: Population, birth rate, enrollment rate

- Epidemiology: Number of flu cases, mortality rate

- Medicine: Blood pressure monitoring, fMRI data

- Natural Sciences: Global temperature, monthly sunspot observations

Supplementary Note: Time series are observational records of real-world dynamic processes, with the core feature being that data points are ordered by timestamps.

Time Series Analysis Objectives

Analytical Significance

- Description and Explanation: Understanding the mechanisms that generate the series (e.g., trends, seasonality)

  Example: Analyzing long-term warming trends in temperature series

- Forecasting: Predicting future values

  Example: Forecasting next quarter's unemployment rate

- Control: Evaluating the impact of intervention measures

  Example: Assessing the effect of monetary policy on the unemployment rate

- Hypothesis Testing: Verifying theoretical models

  Example: Testing the global warming hypothesis

Time Series Models

Basic Decomposition Model

$$x_t = T_t + S_t + R_t$$

Formula Explanation:

- $x_t$: Observed value at time $t$

- $T_t$: Trend component (long-term change trend)

- $S_t$: Seasonal component (periodic change pattern)

- $R_t$: Residual component (random fluctuation/noise)
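The additive structure can be illustrated by building a synthetic series from its three parts. This is a minimal sketch, not the course's own code: the linear trend, the sine-shaped seasonal term with a 12-step period, and all parameter values are assumptions chosen only for illustration.

```python
import math
import random


def make_series(n, slope=0.05, period=12, amplitude=2.0, noise_sd=0.5, seed=0):
    """Build an additive series: trend + seasonal + residual (all hypothetical)."""
    rng = random.Random(seed)
    trend = [slope * t for t in range(n)]                      # long-term change
    seasonal = [amplitude * math.sin(2 * math.pi * t / period)  # repeats every `period` steps
                for t in range(n)]
    residual = [rng.gauss(0.0, noise_sd) for _ in range(n)]     # random fluctuation
    observed = [trend[t] + seasonal[t] + residual[t] for t in range(n)]
    return observed, trend, seasonal, residual


observed, trend, seasonal, residual = make_series(48)
```

In practice the components are unknown and have to be estimated from the observed values; the sketch only shows how the three parts add up.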

Stochastic Process Perspective

- A time series is one realization of a stochastic process.

Supplementary Note:

- Stochastic process = “Natural law” generating the sequence (theoretical model)

- Time series = Actual observed specific data (real-world record)

- Example: Daily 3PM temperature records are a time series; temperature change patterns are a stochastic process

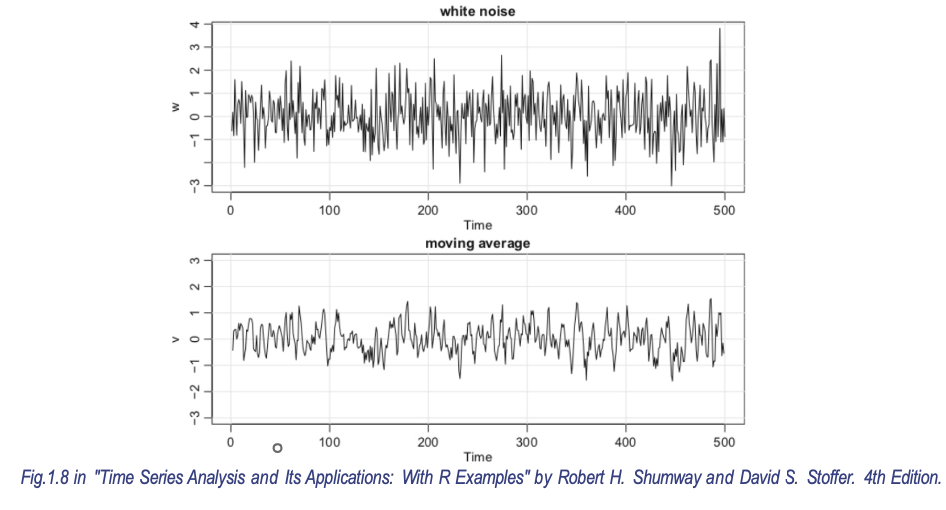

White Noise

Strict Definition

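White noise is a stochastic process $\{w_t\}$ satisfying three conditions:

- Zero mean: $E[w_t] = 0$

- Constant variance: $\mathrm{Var}(w_t) = \sigma_w^2$

- No autocorrelation: $\mathrm{Cov}(w_s, w_t) = 0$ for $s \neq t$

Mathematical Representation

$$w_t \sim \mathrm{WN}(0, \sigma_w^2)$$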

Key Properties:

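- No exploitable linear patterns: values at different times are uncorrelated

- Past values carry no linear information about future values (memoryless); in the Gaussian case they are fully independent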

Gaussian White Noise

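- Special form: the $w_t$ are independent and follow a normal distribution, $w_t \overset{\text{iid}}{\sim} N(0, \sigma_w^2)$

- Cumulative distribution function: $F(w) = P(w_t \le w) = \Phi(w / \sigma_w)$, where $\Phi$ is the standard normal CDF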
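The three white-noise conditions can be checked empirically on simulated Gaussian white noise. The sketch below is illustrative only: the sample size, the value `sigma = 2.0`, and the helper names `sample_autocov` and `normal_cdf` are assumptions, not part of the course material.

```python
import math
import random


def sample_autocov(x, lag):
    """Sample autocovariance at a given lag (divides by n, the usual convention)."""
    n = len(x)
    mean = sum(x) / n
    return sum((x[t] - mean) * (x[t + lag] - mean) for t in range(n - lag)) / n


def normal_cdf(w, sigma=1.0):
    """CDF of N(0, sigma^2), written with the error function."""
    return 0.5 * (1.0 + math.erf(w / (sigma * math.sqrt(2.0))))


rng = random.Random(42)
sigma = 2.0
w = [rng.gauss(0.0, sigma) for _ in range(20000)]

sample_mean = sum(w) / len(w)                   # should be close to 0
sample_var = sample_autocov(w, 0)               # should be close to sigma**2 = 4
lag1_corr = sample_autocov(w, 1) / sample_var   # should be close to 0
```

The sample quantities only approximate the theoretical ones; the gap shrinks as the sample grows.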

Stationary and Non-Stationary Time Series

Core Definition

- Stationary time series: Statistical properties (e.g., mean, variance) do not change over time; series behavior is time-independent.

- Non-stationary time series: Statistical properties change over time; series behavior strongly depends on time.

Supplementary Note: The stable statistical properties of stationary series allow historical patterns to be used for future forecasting (e.g., the pattern “if the previous value is high, the next value decreases” remains applicable).

Problems and Advantages

- Non-stationary series problem: Continuously changing statistical properties may render past patterns completely invalid in the future (e.g., mean is 100 today but 110 tomorrow).

- Stationary series advantage: Fixed mean and variance ensure consistent behavioral patterns, enabling reliable forecasting.
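A rough way to see the difference is to compare the mean of the first half of a series with the mean of the second half. The sketch below does this for simulated noise and for noise with a linear trend added; the slope `0.1`, the series length, and the helper `half_means` are assumptions for illustration, and this comparison is an informal check rather than a formal stationarity test.

```python
import random


def half_means(x):
    """Return the mean of the first half and the mean of the second half."""
    mid = len(x) // 2
    first, second = x[:mid], x[mid:]
    return sum(first) / len(first), sum(second) / len(second)


rng = random.Random(1)
n = 1000
stationary = [rng.gauss(0.0, 1.0) for _ in range(n)]          # constant mean
trending = [0.1 * t + rng.gauss(0.0, 1.0) for t in range(n)]  # mean grows with t

first_s, second_s = half_means(stationary)
first_t, second_t = half_means(trending)
gap_stationary = abs(second_s - first_s)  # small: the mean does not move
gap_trending = abs(second_t - first_t)    # large: the mean drifts upward
```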

Example: Ice Cream Sales (Non-Stationarity)

Data Characteristics

- Sales peak in summer (July-August) and reach a trough in winter (December-January), forming a repeating peak-valley pattern.

- Because the expected sales level depends on the time of year, the mean is not constant and the series is non-stationary.

Stationarization Method: Seasonal Differencing

$$\nabla_{365}\, x_t = x_t - x_{t-365}$$

Formula Explanation:

- $\nabla_{365}$: Differencing operator for a 365-day cycle

- $x_t$: Sales on day $t$ of the current year

- $x_{t-365}$: Sales on the same day of the previous year

Operational Meaning: Computes “current year sales minus previous year sales” to eliminate fixed annual seasonal effects.
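Seasonal differencing can be sketched in a few lines. The sales figures below are hypothetical: a fixed annual sine cycle plus a constant year-over-year increase of 5 units, chosen so that the effect of the differencing is easy to see.

```python
import math


def seasonal_difference(x, period):
    """Return x[t] - x[t - period] for t = period, ..., len(x) - 1."""
    if period <= 0 or period >= len(x):
        raise ValueError("period must be between 1 and len(x) - 1")
    return [x[t] - x[t - period] for t in range(period, len(x))]


period = 365
# Hypothetical two years of daily sales (not real data).
sales = [
    100 + 40 * math.sin(2 * math.pi * (t % period) / period) + 5 * (t // period)
    for t in range(2 * period)
]
diffed = seasonal_difference(sales, period)
```

Here the annual cycle cancels exactly and every differenced value is the year-over-year increase of 5. Note that the first `period` observations are lost, since they have no previous-year counterpart.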

Moving Average

Purpose

Smoothing data, removing random noise, highlighting long-term trends.

Calculation Principle

$k$-period moving average: each smoothed value is the arithmetic mean of $k$ consecutive observations.

Calculation Example

Original series:

3-day moving average:

-

:

-

:

-

:

Output series:

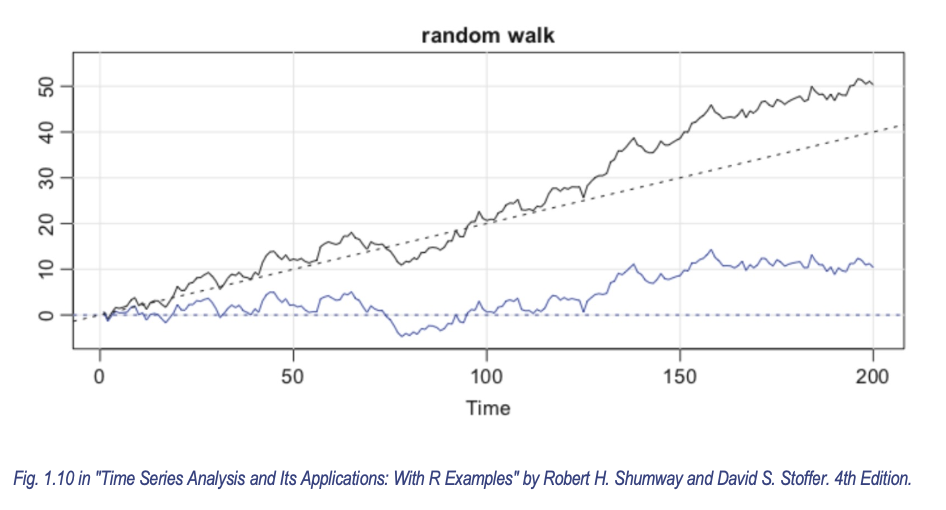
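A moving average can be sketched as follows. This version assumes a trailing window (each output averages the current value and the $k-1$ values before it); a centered window is an equally common convention. The input values are hypothetical and are not the series from the example above.

```python
def moving_average(x, k):
    """Trailing k-period moving average; the output has len(x) - k + 1 values."""
    if k <= 0 or k > len(x):
        raise ValueError("k must be between 1 and len(x)")
    return [sum(x[i - k + 1:i + 1]) / k for i in range(k - 1, len(x))]


data = [3, 6, 9, 12, 15]            # hypothetical observations
smoothed = moving_average(data, 3)  # [6.0, 9.0, 12.0]
```

The output is shorter than the input because the first $k-1$ positions do not have a full window.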

Random Walk with Drift

Basic Model

$$x_t = \delta + x_{t-1} + w_t$$

Formula Explanation:

- $x_t$: Value at time $t$

- $\delta$: Drift term (constant)

- $w_t$: White noise (mean 0, constant variance)

Model Derivation

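Recursive expansion (taking $x_0 = 0$):

$$x_t = \delta + x_{t-1} + w_t = 2\delta + x_{t-2} + w_{t-1} + w_t = \cdots = \delta t + \sum_{j=1}^{t} w_j$$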

Physical Interpretation

Analogy:

- Random walk ($\delta = 0$): A drunkard with random step directions (determined by coin toss)

- Drift term ($\delta$): The drunkard is gently pulled northward by a rope ($\delta$ is the pulling force)

- Overall path: Northward pull ($\delta t$) + random steps ($\sum_{j=1}^{t} w_j$)

Differenced Form

$$\nabla x_t = x_t - x_{t-1} = \delta + w_t$$

Key Conclusion: After differencing, the series becomes white noise plus a constant term ($\delta$), which is stationary.
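The recursion, its expanded form, and the effect of differencing can be checked on a simulated path. This is a sketch under stated assumptions: the starting value is taken as 0, the noise is Gaussian, and the drift `delta = 0.2` and helper name `random_walk_with_drift` are illustrative choices.

```python
import random


def random_walk_with_drift(n, delta, sigma, seed=0):
    """Simulate x_t = delta + x_{t-1} + w_t for t = 1..n, starting from x_0 = 0."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, sigma) for _ in range(n)]
    path, x = [], 0.0
    for w in noise:
        x = delta + x + w
        path.append(x)
    return path, noise


delta = 0.2
path, noise = random_walk_with_drift(n=500, delta=delta, sigma=1.0)

# First differences x_t - x_{t-1}; the first one uses the assumed x_0 = 0.
diffs = [path[0]] + [path[t] - path[t - 1] for t in range(1, len(path))]

# Expanded form: x_n = delta * n + sum of all noise terms.
closed_form_last = delta * len(path) + sum(noise)
```

Each difference equals the drift plus that step's noise term, so the differenced series fluctuates around `delta` with constant variance, while the path itself wanders without a fixed level.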

Signal and Noise

Model:

$$x_t = s_t + w_t$$

This model indicates that the observed time series consists of an underlying signal $s_t$ (e.g., a seasonal component) and superimposed noise $w_t$. The goal of analysis is to extract the signal from the noise.
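One way to see the signal behind the noise is to average many independent realizations of the same process: the noise averages out and the signal remains. The cosine signal with period 50 and amplitude 2, the unit noise variance, and the number of replications below are all assumptions made for this sketch.

```python
import math
import random


def signal(t):
    """Hypothetical deterministic signal: a cosine wave with period 50."""
    return 2.0 * math.cos(2.0 * math.pi * t / 50.0)


def realization(n, rng, sigma=1.0):
    """One observed series: signal plus Gaussian white noise."""
    return [signal(t) + rng.gauss(0.0, sigma) for t in range(n)]


rng = random.Random(7)
n, reps = 50, 2000
runs = [realization(n, rng) for _ in range(reps)]

# Average across realizations at each time point.
ensemble_mean = [sum(run[t] for run in runs) / reps for t in range(n)]
max_error = max(abs(ensemble_mean[t] - signal(t)) for t in range(n))
```

With real data only one realization is usually available, which is why smoothing over time (for example a moving average) is used instead of averaging across realizations.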

Measures of Dependence

Mean Function

The mean function $\mu_x(t) = E[x_t]$ describes the average level of the time series at any time $t$.

Examples:

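- For a moving average of white noise $v_t = \frac{1}{3}(w_{t-1} + w_t + w_{t+1})$, $\mu_v(t) = 0$.

- For random walk with drift $x_t = \delta t + \sum_{j=1}^{t} w_j$, $\mu_x(t) = \delta t$.

- For signal-containing series $x_t = s_t + w_t$, $\mu_x(t) = s_t$.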
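The three mean functions follow from linearity of expectation together with the zero mean of white noise. The derivation below is a sketch in assumed notation: $w_t$ is white noise, $v_t$ a 3-term moving average of white noise, $\delta$ the drift with starting value $x_0 = 0$, and $s_t$ a deterministic signal.

```latex
\begin{align*}
E[v_t] &= \tfrac{1}{3}\bigl(E[w_{t-1}] + E[w_t] + E[w_{t+1}]\bigr) = 0, \\
E[x_t] &= E\Bigl[\delta t + \sum_{j=1}^{t} w_j\Bigr]
        = \delta t + \sum_{j=1}^{t} E[w_j] = \delta t, \\
E[x_t] &= E[s_t + w_t] = s_t + E[w_t] = s_t .
\end{align*}
```

Only the first mean is constant in $t$; the other two depend on time, which is one way to see that those series are not stationary in the mean.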

Autocovariance Function

The autocovariance function quantifies the linear dependence between current and past values in a time series, reflecting internal dynamic dependencies. The core question is: Can changes in a variable at one time be predicted by changes at another time?

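Definition:

$$\gamma_x(s, t) = \mathrm{Cov}(x_s, x_t) = E\bigl[(x_s - \mu_x(s))(x_t - \mu_x(t))\bigr]$$

- Measures linear dependence between different time points $s$ and $t$ in the same series.

- $\gamma_x(s, t) = 0$ indicates no linear relationship between $x_s$ and $x_t$.

- When $s = t$, $\gamma_x(t, t) = \mathrm{Var}(x_t)$.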

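Examples:

- White noise $w_t$: $\gamma_w(s, t) = \sigma_w^2$ if $s = t$, and $0$ otherwise.

- 3-term moving average $v_t = \frac{1}{3}(w_{t-1} + w_t + w_{t+1})$: $\gamma_v(s, t) = \frac{3}{9}\sigma_w^2$ if $s = t$, $\frac{2}{9}\sigma_w^2$ if $|s - t| = 1$, $\frac{1}{9}\sigma_w^2$ if $|s - t| = 2$, and $0$ if $|s - t| > 2$.

- Random walk $x_t = \sum_{j=1}^{t} w_j$: $\gamma_x(s, t) = \min(s, t)\,\sigma_w^2$.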
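All three examples are linear combinations of white-noise terms, and for such series the autocovariance can be computed exactly: if $x = \sum_j a_j w_j$ and $y = \sum_j b_j w_j$, then $\mathrm{Cov}(x, y) = \sigma_w^2 \sum_j a_j b_j$, because distinct noise terms are uncorrelated. The sketch below uses that identity; representing each series as a dictionary of coefficients, and the helper names, are assumptions made for illustration.

```python
def cov_from_coeffs(a, b, sigma2=1.0):
    """Cov(sum_j a[j] w_j, sum_j b[j] w_j) for white noise with variance sigma2."""
    return sigma2 * sum(a[j] * b.get(j, 0.0) for j in a)


def white_noise(t):
    """Coefficients of w_t itself."""
    return {t: 1.0}


def moving_average3(t):
    """Coefficients of a centered 3-term moving average of white noise."""
    return {t - 1: 1 / 3, t: 1 / 3, t + 1: 1 / 3}


def random_walk(t):
    """Coefficients of x_t = w_1 + ... + w_t (no drift, x_0 = 0)."""
    return {j: 1.0 for j in range(1, t + 1)}


sigma2 = 1.0
# Autocovariance between time 5 and time 5 + h for h = 0, 1, 2, ...
gamma_wn = [cov_from_coeffs(white_noise(5), white_noise(5 + h), sigma2) for h in range(3)]
gamma_ma = [cov_from_coeffs(moving_average3(5), moving_average3(5 + h), sigma2) for h in range(4)]
# Random walk: covariance between times 3 and 8 is min(3, 8) * sigma2.
gamma_rw = cov_from_coeffs(random_walk(3), random_walk(8), sigma2)
```

The white-noise and moving-average results depend only on the lag $h$, whereas the random-walk result depends on the actual times through $\min(s, t)$.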