SDSC6012 Course 4: Autoregressive Models

#sdsc6012


Stationarity

Strict Stationarity

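A time series $\{X_t\}$ is strictly stationary if and only if for any $n \ge 1$, any time points $t_1, t_2, \dots, t_n$, and any time shift $k$, we have:

$$F_{X_{t_1}, \dots, X_{t_n}}(x_1, \dots, x_n) = F_{X_{t_1+k}, \dots, X_{t_n+k}}(x_1, \dots, x_n)$$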

Core Meaning: Strict stationarity implies that the complete probability distribution of the time series does not change over time: whichever time window is selected, the joint distribution is the same. This is what makes it meaningful to estimate population properties from statistics computed on a single observed series (strictly speaking, consistency of such estimates also requires ergodicity).

Weak Stationarity

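A time series $\{X_t\}$ is weakly stationary if it has finite variance ($E[X_t^2] < \infty$) and satisfies:

- $\mu_t = E[X_t] = \mu$ is constant (independent of time $t$)
- $\gamma(t, t+k) = \operatorname{Cov}(X_t, X_{t+k}) = \gamma(k)$ depends only on the time lag $k$, and not on the specific time point $t$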

Practical Meaning: Weak stationarity only requires the first moment (mean) and second moments (variance, covariance) to be stable, and does not require the complete probability distribution to be stable. This makes “prediction” possible because the statistical properties do not change over time.

| Feature | Strict Stationarity | Weak Stationarity |
|---|---|---|
| Core Definition | For any set of time points t₁, t₂, …, tₙ and any time shift k, the joint distribution satisfies: F_{X_{t₁},…,X_{tₙ}}(x₁,…,xₙ) = F_{X_{t₁+k},…,X_{tₙ+k}}(x₁,…,xₙ) (all finite-dimensional joint distributions remain unchanged) | 1. E[Xₜ] = μ (constant) 2. Cov(Xₜ, Xₜ₊ₖ) = γ(k) (depends only on lag k, not on time t) |
| Mean | Not explicitly required; as a corollary, if it exists, it must be constant: E[Xₜ] = μ for all t | Explicitly required: E[Xₜ] = μ for all t |
| Variance | Not explicitly required; as a corollary, if it exists, it must be constant: Var(Xₜ) = σ² for all t | Not directly required, but since the covariance depends only on the lag, the variance is automatically constant: Var(Xₜ) = γ(0) |
| Focus | Complete probability distribution | Only the first two moments (mean, variance, covariance) |

Properties of the Autocovariance Function

For a stationary process, the autocovariance function satisfies:

- $\gamma(0) = \operatorname{Var}(X_t) \ge 0$ (variance is non-negative)
- $|\gamma(k)| \le \gamma(0)$ (absolute autocovariance does not exceed variance)
- $\gamma(k) = \gamma(-k)$ (even function)

Autocorrelation Function (ACF)

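$$\rho(k) = \frac{\gamma(k)}{\gamma(0)}$$

Note:

- $\gamma(k)$ is the autocovariance function, i.e., $\gamma(k) = \operatorname{Cov}(X_t, X_{t+k})$.
- $\gamma(0)$ is the variance of the time series, i.e., $\gamma(0) = \operatorname{Var}(X_t)$.

Standardization Meaning: By dividing by the variance $\gamma(0)$, the ACF is constrained to the range $[-1, 1]$, facilitating comparison of correlation strengths between different time series.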

Time Series Analysis

Basic Concept Review

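For time series observations $x_1, x_2, \dots, x_n$, we define the following sample statistics:

- Sample Mean:

$$\bar{x} = \frac{1}{n} \sum_{t=1}^{n} x_t$$

Represents the average level of the time series.

- Sample Autocovariance Function:

$$\hat{\gamma}(k) = \frac{1}{n} \sum_{t=1}^{n-k} (x_{t+k} - \bar{x})(x_t - \bar{x})$$

For lag $k$ (where $k = 0, 1, \dots, n-1$), it measures the covariance between observations separated by $k$ time points. When $k = 0$, it is the sample variance.

- Sample Autocorrelation Function (sample ACF):

$$\hat{\rho}(k) = \frac{\hat{\gamma}(k)}{\hat{\gamma}(0)}$$

Represents the standardized autocovariance, ranging over $[-1, 1]$, used to measure linear correlation.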
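A minimal NumPy sketch of the three sample statistics above. The helper names (`sample_mean`, `sample_autocov`, `sample_acf`) and the five-point series are hypothetical, chosen only so the arithmetic is easy to follow by hand; the divisor is $n$ rather than $n-k$, as in the definition.

```python
import numpy as np

def sample_mean(x):
    """Sample mean: x_bar = (1/n) * sum_t x_t."""
    return float(np.mean(x))

def sample_autocov(x, k):
    """Sample autocovariance at lag k, using divisor n (not n - k)."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    if not 0 <= k < n:
        raise ValueError("lag k must satisfy 0 <= k < n")
    d = x - x.mean()
    # sum over t = 1..n-k of (x_{t+k} - x_bar)(x_t - x_bar)
    return float(np.sum(d[k:] * d[:n - k]) / n)

def sample_acf(x, k):
    """Sample ACF: rho_hat(k) = gamma_hat(k) / gamma_hat(0)."""
    return sample_autocov(x, k) / sample_autocov(x, 0)

x = [1, 2, 3, 4, 5]          # hypothetical series for illustration
print(sample_mean(x))        # 3.0
print(sample_autocov(x, 0))  # 2.0 (sample variance, divisor n)
print(sample_autocov(x, 1))  # 0.8
print(sample_acf(x, 1))      # 0.4
```

For $x = (1, 2, 3, 4, 5)$ the deviations from $\bar{x} = 3$ are $(-2, -1, 0, 1, 2)$, so $\hat{\gamma}(0) = 10/5 = 2$ and $\hat{\gamma}(1) = (2 + 0 + 0 + 2)/5 = 0.8$.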

Simple Example Calculation

Assume a simple time series sample: , i.e., .

- Calculate the sample mean $\bar{x}$:
- Calculate $\hat{\gamma}(0)$ (sample variance):
- Calculate $\hat{\gamma}(1)$ (autocovariance at lag 1):

Asymptotic Properties of White Noise Processes

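For a white noise process $\{w_t\}$, if the sample size $n$ is large, then the sample ACF satisfies:

- Asymptotic distribution: $\hat{\rho}(k) \overset{a}{\sim} N\left(0, \frac{1}{n}\right)$
- For $k = 1, 2, \dots, H$, where $H$ is fixed but arbitrary, $\hat{\rho}(k)$ is asymptotically normally distributed with mean 0 and variance $1/n$ (standard deviation $1/\sqrt{n}$)

Practical Meaning: In large samples, we can use the normal distribution to test the significance of ACF values: a sample autocorrelation outside $\pm 1.96/\sqrt{n}$ is significant at approximately the 5% level, suggesting that the series is not white noise at that lag.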
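The $\pm 1.96/\sqrt{n}$ band can be checked by simulation. This sketch assumes Gaussian white noise, a fixed seed, and 40 lags (all arbitrary choices); for a true white noise series, roughly 95% of the sample autocorrelations should fall inside the band.

```python
import numpy as np

def sample_acf(x, max_lag):
    """Sample ACF rho_hat(1..max_lag) with divisor n."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    d = x - x.mean()
    gamma0 = np.sum(d * d) / n
    return np.array([np.sum(d[k:] * d[:n - k]) / n / gamma0
                     for k in range(1, max_lag + 1)])

rng = np.random.default_rng(42)       # fixed seed for reproducibility
n = 1000
w = rng.normal(0.0, 1.0, size=n)      # Gaussian white noise (an assumption)

rho = sample_acf(w, max_lag=40)
bound = 1.96 / np.sqrt(n)             # approx. 95% band for N(0, 1/n)
inside = np.mean(np.abs(rho) < bound)
print(f"bound = {bound:.4f}, fraction of lags inside = {inside:.2f}")
```

A few lags landing just outside the band is expected by chance; only values well outside it, or many of them, point to real autocorrelation.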

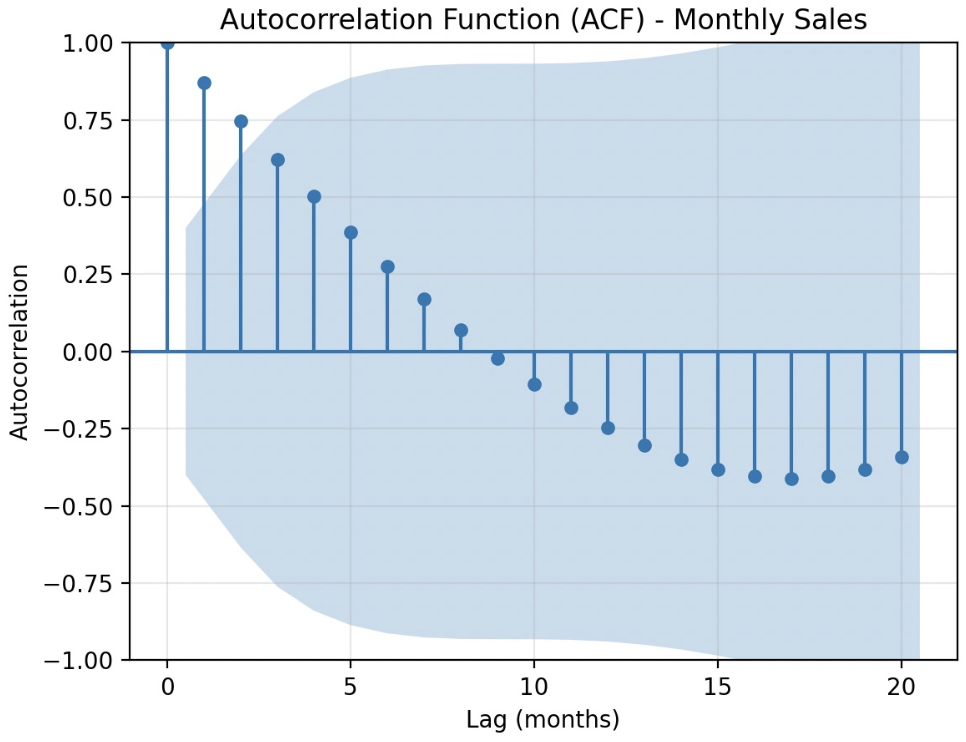

Company Sales Data Case Analysis

Sales data (24 months):

Using Python for ACF Analysis:

- The code uses the `statsmodels` library to plot the ACF and calculate ACF values.
- The `plot_acf` function generates the autocorrelation plot, and the `acf` function calculates specific values.
- The output includes ACF values for the first 10 lags, e.g., Lag 0: 1.0000, Lag 1: a high value (due to the growth trend), etc.

Time Series Operators

Backshift Operator

- Definition: $B x_t = x_{t-1}$
- Multiple Applications: $B^k x_t = x_{t-k}$ (shift backward by $k$ time units)

Example:

Assume a time series:

Forward-shift Operator

- Definition: $F x_t = x_{t+1}$
- Multiple Applications: $F^k x_t = x_{t+k}$ (shift forward by $k$ time units)
- Relationship: $F = B^{-1}$, i.e., $F B x_t = B F x_t = x_t$

Example:

Using the same series:
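The shift operators act on whole series, so a small sketch on arrays makes the boundary behaviour visible. The helpers `backshift` and `forward_shift` are hypothetical names; they mark positions with no available past or future value as NaN (a design choice; pandas' `Series.shift` behaves similarly). The example reuses the series 10, 12, 14, 16, 18 from the first-difference table.

```python
import numpy as np

def backshift(x, k=1):
    """B^k x_t = x_{t-k}; the first k positions have no past value (NaN)."""
    x = np.asarray(x, dtype=float)
    if k == 0:
        return x.copy()
    out = np.full_like(x, np.nan)
    out[k:] = x[:-k]
    return out

def forward_shift(x, k=1):
    """F^k x_t = x_{t+k}; the last k positions have no future value (NaN)."""
    x = np.asarray(x, dtype=float)
    if k == 0:
        return x.copy()
    out = np.full_like(x, np.nan)
    out[:-k] = x[k:]
    return out

x = np.array([10, 12, 14, 16, 18])
print(backshift(x))                  # B x_t: previous value at each t
print(backshift(x, 2))               # B^2 x_t
print(forward_shift(x))              # F x_t: next value at each t
print(forward_shift(backshift(x)))   # F B x_t recovers x_t except at the end
```

Here $FBx_t = x_t$ holds everywhere except at the last position, where the forward shift runs past the end of the sample.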

First Difference Operator (Eliminating Linear Trend)

Definition and Calculation

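- Definition: $\nabla x_t = (1 - B) x_t$
- Calculation: $\nabla x_t = x_t - x_{t-1}$ (calculate the change between consecutive observations)

Working Principle Analysis

- Current Value Component: $1 \cdot x_t = x_t$
- Previous Value Component: $-B x_t = -x_{t-1}$
- Combined Effect: $(1 - B) x_t = x_t - x_{t-1}$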

Complete Calculation Example

| Time (t) | Observation (xₜ) | Difference Calculation Process | Difference Result (∇xₜ) |
|---|---|---|---|
| 1 | 10 | - | Missing |
| 2 | 12 | 12 - 10 = 2 | 2 |
| 3 | 14 | 14 - 12 = 2 | 2 |
| 4 | 16 | 16 - 14 = 2 | 2 |
| 5 | 18 | 18 - 16 = 2 | 2 |

Result Analysis: The differenced series is [Missing, 2, 2, 2, 2]; the constant difference values indicate that the original series has a perfect linear trend.
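In NumPy, `np.diff` computes exactly $x_t - x_{t-1}$; a sketch reproducing the table:

```python
import numpy as np

x = np.array([10, 12, 14, 16, 18])   # observations x_1..x_5 from the table
diff = np.diff(x)                    # x_t - x_{t-1}; no value for t = 1
manual = x[1:] - x[:-1]              # the same thing written as (1 - B) x_t
print(diff)                          # [2 2 2 2]
print(np.array_equal(diff, manual))  # True
```

The output is one element shorter than the input, which corresponds to the "Missing" entry at $t = 1$.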

d-th Difference Operator (Eliminating Higher-Order Trends)

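- Definition: $\nabla^d = (1 - B)^d$; for example, $\nabla^2 x_t = (1 - B)^2 x_t = x_t - 2x_{t-1} + x_{t-2}$
- Application: Used to eliminate polynomial trends; the $d$-th difference reduces a polynomial trend of degree $d$ to a constant.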
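A quick numerical check of this claim, assuming a hypothetical cubic trend $2 + 3t - t^2 + 0.5t^3$ with no noise: `np.diff(x, n=d)` applies $(1-B)^d$, and only at $d = 3$ does the result become constant (equal to $0.5 \cdot 3! = 3$).

```python
import numpy as np

t = np.arange(10, dtype=float)
x = 2 + 3 * t - t**2 + 0.5 * t**3     # hypothetical cubic trend, no noise

for d in (1, 2, 3):
    dx = np.diff(x, n=d)              # (1 - B)^d x_t; the first d values are lost
    spread = dx.max() - dx.min()      # 0 means the differenced series is constant
    print(f"d = {d}: length {len(dx)}, spread {spread:g}")

print(np.diff(x, n=3))                # every entry equals 0.5 * 3! = 3
```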

Empirical Evidence of Trend Elimination by Difference Operators

Mathematical Proof of Linear Trend Elimination

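When the time series has a linear trend:

$$x_t = \beta_0 + \beta_1 t + y_t$$

where $y_t$ is a stationary component.

First Difference Calculation Process:

$$\nabla x_t = x_t - x_{t-1} = (\beta_0 + \beta_1 t + y_t) - (\beta_0 + \beta_1 (t-1) + y_{t-1}) = \beta_1 + \nabla y_t$$

Conclusion: The linear trend term is completely eliminated, leaving only the constant term $\beta_1$ and the difference of the stationary component, $\nabla y_t$.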

Mathematical Proof of Quadratic Trend Elimination

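When the time series has a quadratic trend:

$$x_t = \beta_0 + \beta_1 t + \beta_2 t^2 + y_t$$

First Difference Result:

$$\nabla x_t = \beta_1 + \beta_2 \left[t^2 - (t-1)^2\right] + \nabla y_t = (\beta_1 - \beta_2) + 2\beta_2 t + \nabla y_t$$

Second Difference Calculation:

$$\nabla^2 x_t = \nabla x_t - \nabla x_{t-1} = 2\beta_2 t - 2\beta_2 (t-1) + \nabla y_t - \nabla y_{t-1} = 2\beta_2 + \nabla^2 y_t$$

Conclusion: The quadratic trend is completely eliminated, leaving only the constant term $2\beta_2$ and the second difference of the stationary component, $\nabla^2 y_t$.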
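The derivation can be checked numerically. This sketch assumes hypothetical coefficients $\beta_0 = 1$, $\beta_1 = 0.5$, $\beta_2 = 0.02$ and uses Gaussian white noise as the stationary component $y_t$; after second differencing, what remains should be $2\beta_2$ plus $\nabla^2 y_t$.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 500
t = np.arange(1, n + 1, dtype=float)
beta0, beta1, beta2 = 1.0, 0.5, 0.02    # hypothetical trend coefficients
y = rng.normal(0.0, 1.0, size=n)        # stationary component (white noise here)
x = beta0 + beta1 * t + beta2 * t**2 + y

d2x = np.diff(x, n=2)                   # nabla^2 x_t = 2*beta2 + nabla^2 y_t
print(f"mean of second difference: {d2x.mean():.4f} (2*beta2 = {2 * beta2})")
# After removing the constant, what remains is exactly nabla^2 y_t
print(np.allclose(d2x - 2 * beta2, np.diff(y, n=2)))
```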

Linear Process

Definition

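A time series $\{x_t\}$ is called a linear process if it can be expressed as:

$$x_t = \mu + \sum_{j=-\infty}^{\infty} \psi_j w_{t-j}$$

where:

- $w_t \sim \text{wn}(0, \sigma_w^2)$ (white noise process)
- $\mu$ is the mean of the process
- $\psi_j$ are weight coefficients satisfying absolute summability: $\sum_{j=-\infty}^{\infty} |\psi_j| < \infty$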

Component Analysis

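- Causal Part: $\sum_{j=0}^{\infty} \psi_j w_{t-j}$, indicating the current value depends on present and past shocks
- Non-causal Part: $\sum_{j=-\infty}^{-1} \psi_j w_{t-j}$, indicating the current value depends on future shocks
- Absolute Summability Condition: $\sum_j |\psi_j| < \infty$ ensures the weight coefficients eventually decay to zero: $\psi_j \to 0$ as $|j| \to \infty$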
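A minimal sketch of a linear process with finitely many nonzero weights, so the sum is finite and absolute summability holds trivially. The weights $\psi_{-1} = 0.4$ (a non-causal term), $\psi_0 = 1$, $\psi_1 = 0.5$, the mean $\mu = 2$, and the function name `linear_process` are hypothetical choices for illustration.

```python
import numpy as np

def linear_process(w, psi, mu=0.0):
    """Return (ts, x) with x_t = mu + sum_j psi[j] * w[t - j].

    psi maps a lag j to its weight; negative j means a future shock
    (the non-causal part). Only times t for which every required
    w[t - j] lies inside the sample are returned.
    """
    w = np.asarray(w, dtype=float)
    n = len(w)
    j_min, j_max = min(psi), max(psi)
    # need 0 <= t - j <= n - 1 for every lag j
    ts = np.arange(max(j_max, 0), n + min(j_min, 0))
    x = np.full(len(ts), mu, dtype=float)
    for j, weight in psi.items():
        x += weight * w[ts - j]
    return ts, x

rng = np.random.default_rng(1)
w = rng.normal(size=200)                 # hypothetical white noise shocks
psi = {-1: 0.4, 0: 1.0, 1: 0.5}          # hypothetical weights; lag -1 is non-causal
ts, x = linear_process(w, psi, mu=2.0)

# x at t = ts[0] = 1 combines the future shock w_2, the current w_1 and the past w_0
print(ts[0], x[0], 2.0 + 0.4 * w[2] + 1.0 * w[1] + 0.5 * w[0])
```

Dropping the negative lag from `psi` gives a causal process, whose value at $t$ uses only $w_t, w_{t-1}, \dots$.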

Relationship with AR Models

Important Conclusion: All stationary AR models are special cases of linear processes, but not all linear processes are AR models. AR models store “memory” in their own past values, while a linear process expresses it through a weighted sum of white noise shocks.

Linear Processes and Autoregressive Models (AR)

Core Relationship

The relationship between linear processes and autoregressive models can be summarized as:

“All (stationary) AR models are linear processes, but not all linear processes are AR models.”

Example Illustration

- Linear Process: Like a broad “model family”, containing various types of models
- AR Model: A “specific and widely used member” of this family

Key Difference:

- AR models store “memory” in their own historical values ($x_{t-1}, x_{t-2}, \dots$), defining the current value through a linear combination of past observations

Autoregressive Models (AR)

Intuitive Understanding

The core idea of AR models: the current value of a time series can be explained by a linear combination of its past values.

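Mathematical Expression:

$$x_t = \phi_1 x_{t-1} + \phi_2 x_{t-2} + \cdots + \phi_p x_{t-p} + w_t$$

where:

- $\phi_1, \phi_2, \dots, \phi_p$ are autoregressive coefficients ($\phi_p \neq 0$)
- $w_t$ is the white noise term (random shock at the current time)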

Examples of AR Models of Different Orders

AR(1) Model (First-Order Autoregressive)

Model Form:

$$x_t = \phi x_{t-1} + w_t$$

Practical Meaning: Only considers the effect of yesterday on today

Specific Example:

Assume , then:

AR(2) Model (Second-Order Autoregressive)

Model Form:

$$x_t = \phi_1 x_{t-1} + \phi_2 x_{t-2} + w_t$$

Practical Meaning: Considers the combined influence of yesterday and the day before yesterday

Specific Example:

Assume , , then:

Practical Application Example

Stock Market Price Prediction

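Assume a stock’s daily closing price follows an AR(2) model:

$$x_t = 0.6 x_{t-1} + 0.3 x_{t-2} + w_t$$

This means:

- Today’s price puts a weight of 0.6 on yesterday’s price
- It puts a weight of 0.3 on the price from the day before yesterday
- The random shock $w_t$ adds an unpredictable fluctuation on top of these weighted past values; because the coefficients are weights rather than percentage shares, the leftover $1 - 0.6 - 0.3 = 0.1$ is not the share contributed by the noise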

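Applicable Scenario: AR models are suitable for data with serial dependence (autocorrelation), i.e., where the current value depends on past observations. A stationary AR model assumes a constant mean, so a trend should first be removed, e.g., by differencing.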
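To see the AR(2) price model in action, the sketch below simulates $x_t = 0.6x_{t-1} + 0.3x_{t-2} + w_t$ with hypothetical unit-variance Gaussian shocks and then recovers the coefficients by ordinary least squares, regressing $x_t$ on $(x_{t-1}, x_{t-2})$. Least squares is one simple estimation approach chosen here for illustration; the series is a zero-mean stand-in, not real price data.

```python
import numpy as np

rng = np.random.default_rng(123)
phi1, phi2 = 0.6, 0.3           # coefficients from the stock example
n, burn = 2000, 200             # burn-in discards start-up effects
w = rng.normal(0.0, 1.0, size=n + burn)   # hypothetical unit-variance shocks

x = np.zeros(n + burn)
for t in range(2, n + burn):
    # x_t = 0.6 x_{t-1} + 0.3 x_{t-2} + w_t
    x[t] = phi1 * x[t - 1] + phi2 * x[t - 2] + w[t]
x = x[burn:]

# Least-squares fit of x_t on (x_{t-1}, x_{t-2}) to recover the coefficients
X = np.column_stack([x[1:-1], x[:-2]])
phi_hat, *_ = np.linalg.lstsq(X, x[2:], rcond=None)
print(np.round(phi_hat, 2))     # should be close to [0.6, 0.3]
```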

Operator Notation

Backshift Operator

- Definition: $B x_t = x_{t-1}$
- Multiple Applications:
  - $B^2 x_t = B(B x_t) = x_{t-2}$ (shift backward by 2 steps)
  - $B^p x_t = x_{t-p}$ (shift backward by $p$ steps)

Operator Form of AR Models

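Convert the AR(p) model:

$$x_t = \phi_1 x_{t-1} + \phi_2 x_{t-2} + \cdots + \phi_p x_{t-p} + w_t$$

to:

$$(1 - \phi_1 B - \phi_2 B^2 - \cdots - \phi_p B^p)\, x_t = w_t$$

Define the autoregressive operator:

$$\phi(B) = 1 - \phi_1 B - \phi_2 B^2 - \cdots - \phi_p B^p$$

Finally, obtain the concise form:

$$\phi(B)\, x_t = w_t$$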

Operator Interpretation

$\phi(B)\, x_t = w_t$ is not just an abbreviation; it represents a system or filter:

- Input: Original time series $x_t$
- System: $\phi(B)$ (defined by parameters $\phi_1, \dots, \phi_p$)
- Output: White noise $w_t$

Meaning: If we filter the original series through this system, all predictable patterns that can be captured by past values will be removed, ultimately outputting pure random white noise.
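The filter interpretation can be sketched directly: generate $x_t$ from hypothetical white noise via $\phi(B)x_t = w_t$, then apply $\phi(B) = 1 - \phi_1 B - \phi_2 B^2$ to $x_t$ and compare with the input noise. The coefficients $(0.6, 0.3)$ and the zero start-up values are assumptions.

```python
import numpy as np

rng = np.random.default_rng(5)
phi = np.array([0.6, 0.3])     # hypothetical AR(2) coefficients phi_1, phi_2
n = 300
w = rng.normal(size=n)

# Generate x_t from phi(B) x_t = w_t, i.e. x_t = phi_1 x_{t-1} + phi_2 x_{t-2} + w_t
x = np.zeros(n)
for t in range(n):
    x[t] = w[t]
    for j, c in enumerate(phi, start=1):
        if t - j >= 0:
            x[t] += c * x[t - j]

# Apply the filter phi(B) = 1 - phi_1 B - phi_2 B^2 to the series (t >= p)
p = len(phi)
filtered = x[p:].copy()
for j, c in enumerate(phi, start=1):
    filtered -= c * x[p - j:n - j]

print(np.allclose(filtered, w[p:]))  # True: the output is the white noise input
```

In practice the coefficients are estimated, so the filtered output is only approximately white noise; checking its sample ACF against the $\pm 1.96/\sqrt{n}$ band is a natural diagnostic.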

Detailed Analysis of AR(1) Model

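Model Form

$$x_t = \phi x_{t-1} + w_t$$

Solution Process

By successive substitution:

$$x_t = \phi x_{t-1} + w_t = \phi(\phi x_{t-2} + w_{t-1}) + w_t = \phi^2 x_{t-2} + \phi w_{t-1} + w_t$$

Continue this process $k$ times:

$$x_t = \phi^k x_{t-k} + \sum_{j=0}^{k-1} \phi^j w_{t-j}$$

If $|\phi| < 1$ and $x_t$ is stationary, then $\phi^k x_{t-k} \to 0$ (in mean square) as $k \to \infty$, giving $x_t = \sum_{j=0}^{\infty} \phi^j w_{t-j}$.

Operator Solution

Using the backshift operator:

$$(1 - \phi B)\, x_t = w_t$$

Apply the inverse operator (valid when $|\phi| < 1$):

$$x_t = (1 - \phi B)^{-1} w_t = \sum_{j=0}^{\infty} \phi^j B^j w_t = \sum_{j=0}^{\infty} \phi^j w_{t-j}$$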
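A numerical sketch of the inverse operator, assuming $\phi = 0.5$, hypothetical Gaussian shocks, and truncation after $J = 60$ terms: the recursion $(1 - \phi B)x_t = w_t$ (started from zero) and the weighted sum $\sum_{j=0}^{J}\phi^j w_{t-j}$ should agree up to the negligible neglected weights.

```python
import numpy as np

rng = np.random.default_rng(0)
phi, n, J = 0.5, 500, 60        # |phi| < 1; J is a hypothetical truncation point
w = rng.normal(size=n)

# Recursive form (1 - phi B) x_t = w_t, started from x_{-1} = 0
x_rec = np.zeros(n)
x_rec[0] = w[0]
for t in range(1, n):
    x_rec[t] = phi * x_rec[t - 1] + w[t]

# Inverted form x_t = sum_{j>=0} phi^j w_{t-j}, truncated after J terms
psi = phi ** np.arange(J + 1)
x_inv = np.convolve(w, psi)[:n]

# Tiny: the neglected weights are phi^(J+1) and smaller
print(np.max(np.abs(x_rec - x_inv)))
```

With $|\phi| \ge 1$ the weights $\phi^j$ no longer shrink, the truncated sum does not settle down, and the inversion breaks, which is the causality issue discussed next.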

Causality and Stationarity

Causal Process

A time series process is called causal if its current value:

- depends only on present and past inputs (shocks), and
- does not depend on future values.

Causality Condition for AR(1) Model

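The AR(1) process is causal if and only if either of the following equivalent conditions holds:

Condition 1: $|\phi| < 1$

Condition 2: The root $z_0 = 1/\phi$ of the polynomial $\phi(z) = 1 - \phi z$ satisfies $|z_0| > 1$

When $|\phi| < 1$: The process can be expressed as $x_t = \sum_{j=0}^{\infty} \phi^j w_{t-j}$, depending only on past and present noise.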

Non-causal Case

When $|\phi| > 1$, the process is non-causal, depending on future noise:

$$x_t = -\sum_{j=1}^{\infty} \phi^{-j} w_{t+j}$$

Stationarity Condition for AR(p) Models

An AR(p) model has a causal stationary solution if and only if all roots of the autoregressive characteristic polynomial:

$$\phi(z) = 1 - \phi_1 z - \phi_2 z^2 - \cdots - \phi_p z^p$$

lie outside the unit circle (i.e., the modulus of all roots is greater than 1).
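The root condition is easy to check numerically with `np.roots`, which expects polynomial coefficients from the highest power down. The helper names `ar_roots` and `is_causal`, the tolerance, and the test coefficients are assumptions; $(0.6, 0.3)$ is the stock-price model from earlier, and $\phi = 1$ (a random walk) puts the root exactly on the unit circle.

```python
import numpy as np

def ar_roots(phi):
    """Roots of phi(z) = 1 - phi_1 z - ... - phi_p z^p."""
    phi = np.asarray(phi, dtype=float)
    # np.roots wants coefficients ordered from z^p down to the constant term
    coeffs = np.concatenate([-phi[::-1], [1.0]])
    return np.roots(coeffs)

def is_causal(phi, tol=1e-8):
    """True if every root lies strictly outside the unit circle."""
    return bool(np.all(np.abs(ar_roots(phi)) > 1.0 + tol))

print(ar_roots([0.6, 0.3]))      # stock-price AR(2): roots about 1.08 and -3.08
print(is_causal([0.6, 0.3]))     # True
print(is_causal([1.0]))          # False: root z = 1 lies on the unit circle
print(is_causal([1.5, -0.75]))   # True: complex roots with modulus about 1.155
```

The small tolerance guards against floating-point roots that sit on the unit circle in exact arithmetic but come out marginally larger than 1.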

Example: Checking Causality

Example 1: AR(2) Model

Consider the model:

Characteristic polynomial:

Solve the equation:

Roots: ,

Since (on the unit circle), the process is not causal.

Example 2: AR(2) Model

Consider the model:

Characteristic polynomial:

Solve the equation to get roots: ,

Since and , the process is causal.